How you sense the world can help you understand AI

In my first post in this AI series, I said I am increasingly concerned that many people and businesses are not acting fast enough to heed the AI alarm bells that have sounded. I believe the time to act is now. But what is holding people back? I know firsthand that there are a number of reasons, including the belief that “We have done it, we have rolled out ChatGPT,” or “We’ve set up a committee and are waiting for their recommendations.” It’s good that these things have been done, but there are significant risks in not moving further. Fundamentally, I believe that a major factor limiting the speed of action and commitment beyond these early steps is that many people don’t really understand what AI (or, more specifically in this series, generative AI) is, how it works, and why they should act on it. Many leaders believe we are in another tech vendor hype cycle. Some have been burned in the past and just don't believe there is much to this, or doubt that there will be a satisfactory return on investment. Some caution, of course, is warranted, and risk analysis is always prudent.

So, regardless of where you sit in the decision-making process for what to do with AI, give me 7 minutes of your time to build the next step in an understanding of what’s going on with this technology and why you need to pay attention to it and understand it. It’s all around you, and you may not recognize it. Have you been to a doctor’s office recently and been asked if their AI scribe can record and summarize your visit? What is that scribe doing, and how, and why is the doctor so keen to use it?

This post, and the next few, will provide the grounding you need to understand what the fuss is all about. This is written in technology-lite terminology. Anyone should be able to follow it based solely on how we humans live and learn. In this post you will learn:

how we model the world in order to understand it better

that real-world models can be instantiated in computer software and subsequent processing

that some types of AI leverage a new type of computing that we have not been able to use in a practical way until recently. This is one of the primary reasons things are changing: we have a new problem-solving technique and the opportunity to create new things in ways we simply did not have 24 months ago.

How do we learn?

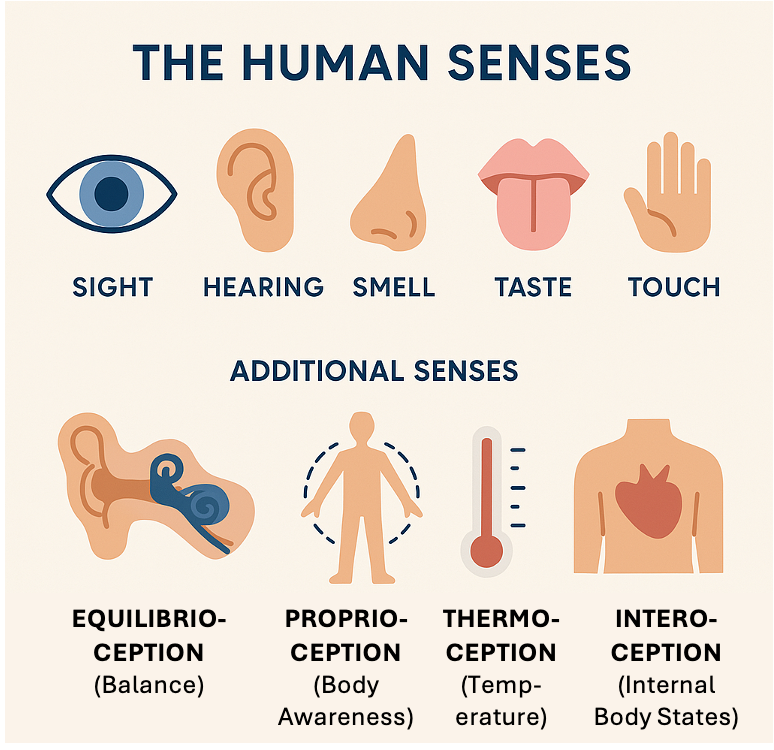

We use our five main senses (sight, touch, hearing, smell, and taste), along with additional ones that scientists recognize, including equilibrioception (balance), proprioception (body awareness), thermoception (temperature), nociception (pain), and interoception (internal body states), to experience the world. From our earliest moments of existence, we collect and process inputs using our senses and try to understand what they mean. As we grow, we learn in an experiential fashion. Most of us are also taught in a more formal, structured fashion by going to school, reading text, and watching explanatory videos.

Source: Microsoft Copilot/Designer (prompt: What are the senses we use?)

Structured learning teaches us things like language systems, organized through grammar (a model of language), and mathematical systems (numbers and basic calculation operations). This begins at a young age, for some children between the ages of two and four, and continues through pre-school and into the primary school system.

Experiential learning teaches us from what we experience living day to day in our own environments. Touching a hot stove quickly teaches us that cooking surfaces can burn us instantly if they are hot enough. Plus, our brains learn complex concepts very early. For example, calling someone a nasty name may hurt their feelings (visible through tears or an emotional response).

Aside: there are lots of other learning modes and approaches, including blends of all of them, such as informal learning, inquiry-based learning, collaborative learning, microlearning, and more.

This process of observing with our senses, testing, refining our understanding, drawing conclusions, and internalizing things repeats over and over. Sometimes we don't even realize we are doing this. It's just being human.

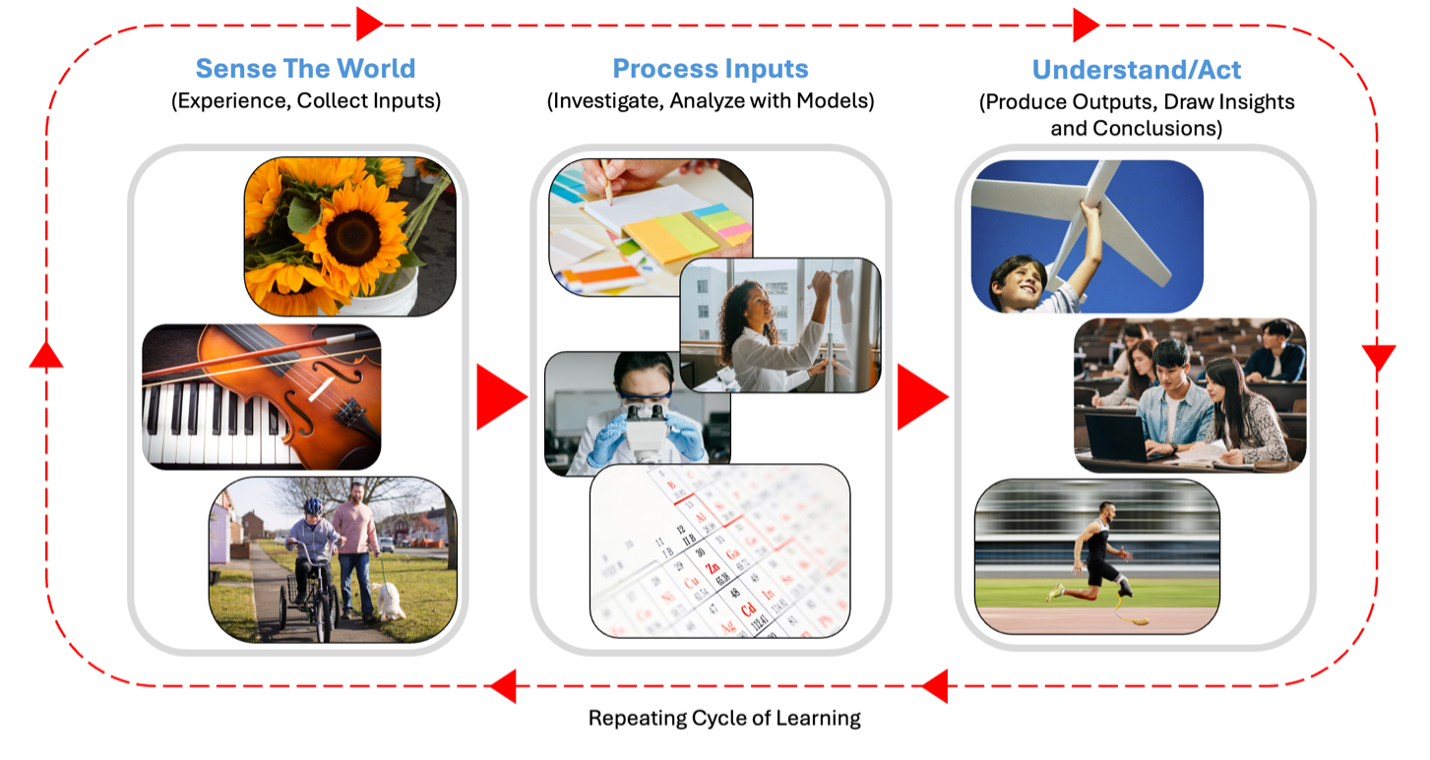

How humans learn

One important reason that we are able to make sense of things so readily is that we have come to learn from and rely on models of “how things work”. In fact, we have modeled many things in our world, and those models give us great insight into how things work. Many of our models are incomplete, some are wrong, and most are constantly tested and updated. Some of the models are quite formal (think mathematical equations representing gravity) and some are informal (nuanced behaviours that are deemed acceptable or not acceptable in society). Models give us a way of representing complex things so that we can understand them, evaluate them, test them, and refine them, all by building on understandings that we or others have reached in the past.

Periodic Table of the Elements - Source: Microsoft Stock Images

For example, over the centuries we have modeled the chemical elements that we have discovered. Think of them as the fundamental building blocks of all matter, including living things. An element is a substance made of only one type of atom (e.g. hydrogen (H) or oxygen (O)). The periodic table of the elements is a chart that documents our understanding of the elements that have been modeled. It organizes all known chemical elements in a systematic way. They are arranged in the table by atomic number, which is a count of the number of protons in the nucleus of an atom, as well as by other factors (e.g. groups and periods). The periodic table is like a map for chemists, helping them understand and predict the behavior of things made up of the elements, either alone or in combination, based on their position in the table.

Music Notation - Source: Microsoft Stock Images

From a very different domain, thinking about music from a model perspective helps us understand it better. Musical chords and note combinations can be thought of as a model for what sounds good together. Here's why:

1. Harmony: Chords are combinations of notes played together, and they create harmony. Certain combinations of notes sound pleasing to our ears because of the way their sound waves interact. For example, a C major chord (C, E, G) is often perceived as happy and bright. Musical notation, as in the image above, is a way of depicting a model of what a composer has created for people to enjoy.

2. Scales and Keys: Music is often based on scales, which are sequences of notes that sound good together. A key is a group of notes that forms the basis of a piece of music. When you play chords and notes within the same key, they usually sound harmonious.

3. Chord Progressions: These are sequences of chords that are used to create a sense of movement in music. Certain progressions are very common in popular music because they sound pleasing and evoke specific emotions. For example, the I-IV-V-I progression is widely used in many songs.

Musical notation has standardized the way we convey these complex model nuances.

We have built many models to help us understand the world:

the Laws of Thermodynamics model the behaviour of energy and matter in a system

we have modeled the skeletal system of the human body to understand how it takes form and supports the entire set of organs, muscles, and systems in the body

we have modeled the endocrine system (hormone production)

the theory of relativity models the behaviour of objects in space and time

a balance sheet models the financial state of a company.

You get the picture. (In fact, your understanding of that idiom, a phrase whose meaning differs from the literal meanings of its individual words, is what lets you grasp what those four words imply.) These models help us process inputs to create outputs that help us understand things: we create a hypothesis, test it with some inputs, and observe the outputs.

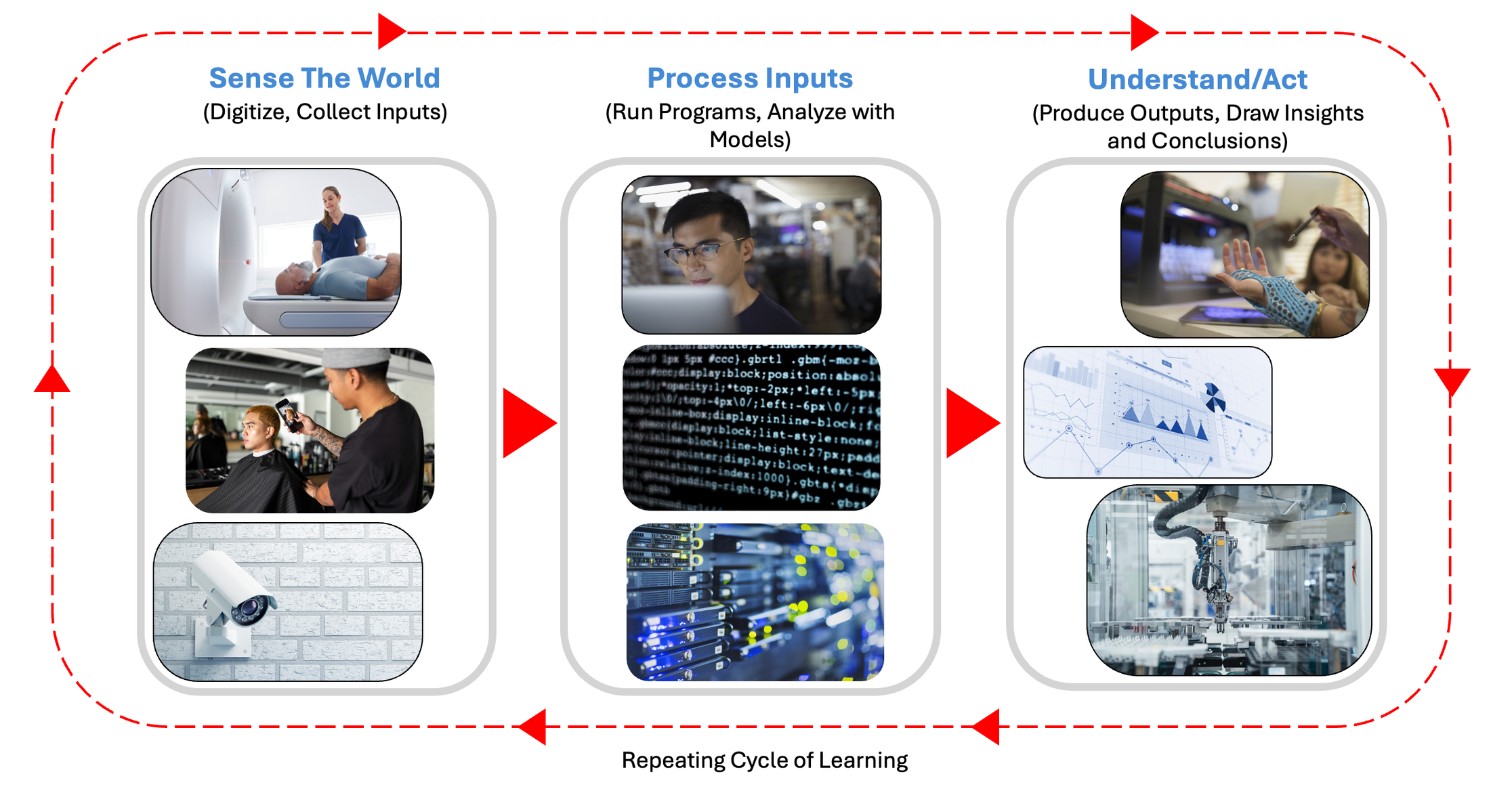

Computers can help us model and understand the world

Just like we have been trained (or have learned) to model the world using structured and experiential techniques, computer systems can also be trained to model the world.

We have come to use computers very effectively by following a very similar process to what we do as humans as we go about our daily lives sensing, understanding, and acting on those results. In the digital world we now live in, we digitize things we can observe to create input data for computers, then we process the data by running computer software programs, and finally create outputs.

These days we have many ways to create input data by a digitizing process. We can:

automatically sense things using Internet of Things (IoT) devices (e.g. temperature or vibration sensors) and turn those measurements into a digital format

take pictures and video with our digital cameras, which store that data in digital format

speak to our phones through a microphone and digitize what is recorded

type things into the computer using keyboards

Historically, the way we have used computer software programs to process input data is in what is called a deterministic fashion. In this type of computing the outcome is always predictable and repeatable. This means that if you give a computer the same input and run the same program, it will always produce the same output. An example of this is a calculator: multiply the same two numbers together repeatedly on the calculator and you will always get the same result.

Many of the models we have created over the past hundreds of years can be represented by logic in software programs. With those software programs we can supply inputs, process the inputs through the model, and collect or produce outputs.
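To make this concrete, here is a minimal sketch of the music model from earlier written as deterministic software. It assumes a simplified view of music theory (sharps only, major keys only), and the function names are my own, chosen for illustration. You supply a key as input, the program processes it through the model, and it outputs the I-IV-V-I chord progression.

```python
# Twelve pitch classes, written with sharps only to keep the sketch simple.
NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Semitone offsets of a major scale from its root note.
# This short list is the "model" of what a major key is.
MAJOR_SCALE_STEPS = [0, 2, 4, 5, 7, 9, 11]


def major_scale(root: str) -> list[str]:
    """Return the seven notes of the major scale that starts on `root`."""
    start = NOTES.index(root)
    return [NOTES[(start + step) % 12] for step in MAJOR_SCALE_STEPS]


def triad(scale: list[str], degree: int) -> tuple[str, str, str]:
    """Build a three-note chord on a scale degree (1-based) by taking
    every other note of the scale, wrapping around at the end."""
    i = degree - 1
    return (scale[i % 7], scale[(i + 2) % 7], scale[(i + 4) % 7])


def progression(root: str, degrees=(1, 4, 5, 1)) -> list[tuple[str, str, str]]:
    """Return the chords for the given scale degrees in the key of `root`."""
    scale = major_scale(root)
    return [triad(scale, d) for d in degrees]


if __name__ == "__main__":
    # Input: the key of C. Output: the I-IV-V-I progression.
    for chord in progression("C"):
        print(chord)
    # ('C', 'E', 'G')
    # ('F', 'A', 'C')
    # ('G', 'B', 'D')
    # ('C', 'E', 'G')
```

Run it as many times as you like with the same key and you get exactly the same chords. That repeatability is what makes it deterministic.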

Digital System Processing in Aid of Learning and Action

Computers are really good at doing this type of work. Not only can they handle immensely large sets of input data, representing many different conditions or perhaps experiments we want to run, but they can also process that information much more quickly than humans (and they don’t tire from rote work). Humans are still comparatively great at evaluating the outputs of computer processing, depending on what we are doing with the outputs, how complex the output is, or what we are trying to understand from those outputs.

But now, the way we get computers to create or use models, one of the major branches of AI work, has enabled a new type of computing that until recently was not practical to do with the computing infrastructure that was available to us. The advent of today’s scalable cloud computing infrastructure, advanced compute capabilities from silicon microprocessors and the availability of immense amounts of digitized information has enabled AI researchers to unlock the power of this method.

So, what is this new type of computing and how does it work?

For most of the past 70+ years in the digital computing age we have used what is known as deterministic computing. This is a type of computing where the outcome is always predictable and repeatable, as was mentioned above using the calculator example. Classical computers are built on deterministic logic. Examples of software to process input and create output in this way include word processors, spreadsheets, operating systems, compilers, and most traditional business software.

In the last 10–15 years, there has been a significant increase in the use of stochastic approaches, especially with the growth of AI. These systems often rely on randomness, probability, and learning from data, which are inherently non-deterministic. Stochastic (or probabilistic) computing has been used in specific domains for some time, such as machine learning (an AI technique we’ll define later), simulations (e.g., weather, physics, finance), and cryptography (random number generation). Now, technological advances have made it practical for cases where a lot of data and processing power are needed to produce and leverage models.

Deterministic computing has been the dominant model historically, and it remains important in many areas, like scientific research and financial calculations, where you need to be sure that the results are consistent and reliable. Stochastic computing is now playing a much larger role, especially in fields where uncertainty, learning, and adaptability are important. As you may have deduced, generative AI, which has taken the world by storm, is a technique whose time has arrived. It uses both stochastic and deterministic approaches.
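For readers who like to see the difference in action, here is a small sketch contrasting the two styles. The calculator-style `multiply` function is deterministic. The `pick_next_word` function is stochastic: it chooses a word at random according to a set of probabilities. Those probabilities and the function names are made up purely for illustration and do not come from any real AI system.

```python
import random


def multiply(a: float, b: float) -> float:
    """Deterministic: the same inputs always give the same output."""
    return a * b


# Hypothetical probabilities for the word that follows
# "The weather today is ..." (invented for this illustration).
NEXT_WORD_PROBS = {"sunny": 0.5, "cloudy": 0.3, "rainy": 0.2}


def pick_next_word(rng: random.Random) -> str:
    """Stochastic: draw one word at random, weighted by its probability."""
    words = list(NEXT_WORD_PROBS)
    weights = list(NEXT_WORD_PROBS.values())
    return rng.choices(words, weights=weights, k=1)[0]


if __name__ == "__main__":
    # The calculator gives the same answer every time.
    print([multiply(6, 7) for _ in range(3)])

    # The random draw can differ from run to run, although "sunny"
    # should turn up most often over many draws.
    rng = random.Random()
    print([pick_next_word(rng) for _ in range(5)])
```

One detail worth noting: if the random number generator is given a fixed starting seed, for example `random.Random(42)`, the sequence of "random" choices becomes repeatable. This is a small taste of how stochastic and deterministic techniques can be combined.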

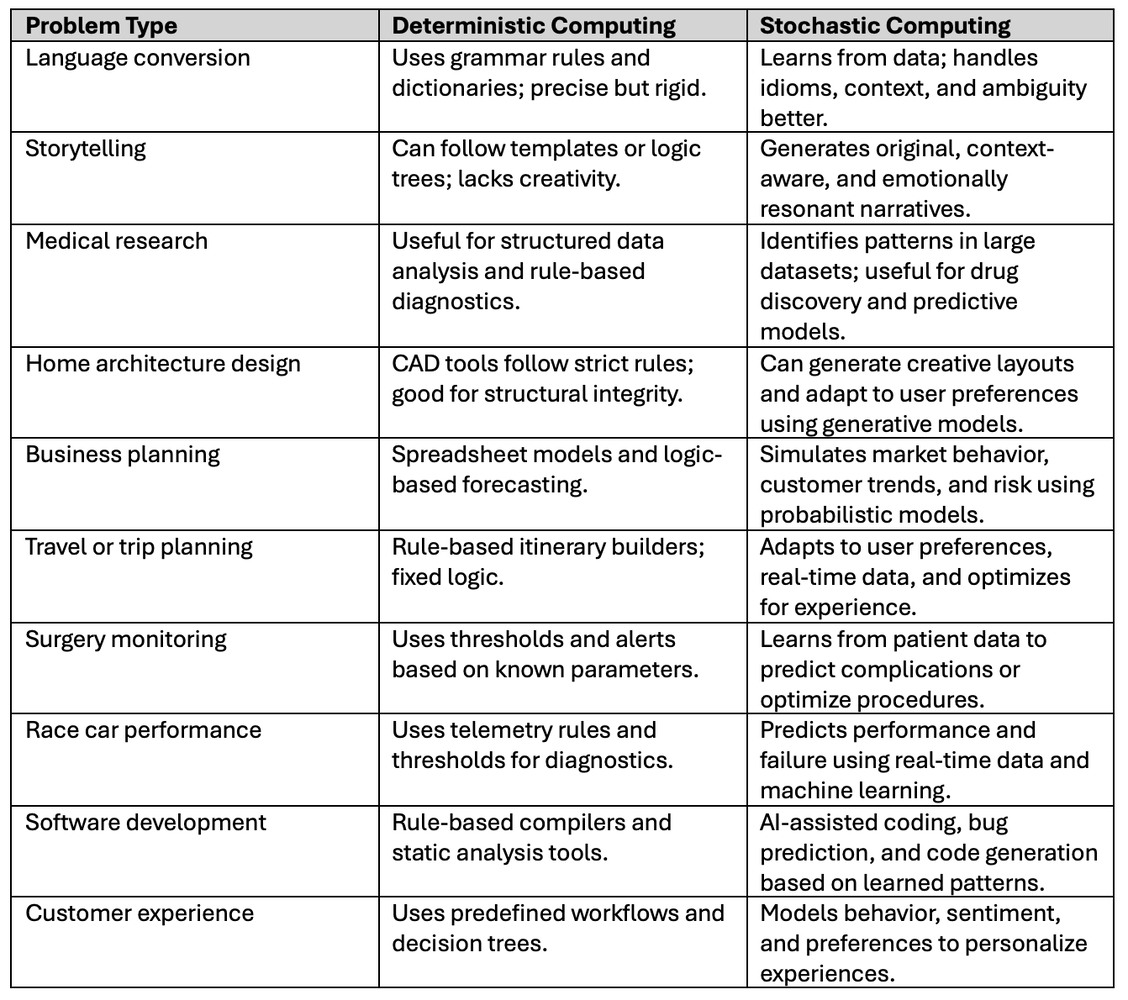

Deterministic and stochastic computing approaches both have their applications and are often used in combination. Here’s a comparative list showing how deterministic computing and stochastic computing apply to various problem types. This highlights where rule-based systems excel and where probabilistic, data-driven approaches are more effective.

Comparison of Deterministic and Stochastic Computing Techniques for Various Problem Types

OK, let’s put together these learnings to take us one step closer to understanding the power of AI

(and why you need to pay attention to what is happening in our digital world which is taking advantage of stochastic computing to solve difficult problems we couldn’t readily solve before.)

Let’s use an example we don’t often think too deeply about.

One of the most important things that we have modeled, in several different ways, is how to express ourselves through written communication. How have we done this? By modeling the rules that govern how letters and words are combined to form meaningful units of written communication. We call this model grammar, as mentioned above, and it lets us communicate effectively. Grammar makes written communication less ambiguous and confusing. It also helps convey clarity, tone, and nuance; without it, writing would be difficult to analyze or interpret.

Some important things about grammar:

1. Grammar Is Language-Specific. Every language, whether spoken or written, has its own set of grammatical rules that govern:

word order (syntax)

word formation (morphology)

tense, aspect, and mood

agreement (e.g., subject-verb, noun-adjective)

punctuation and orthography (in written form)

2. Writing Systems Reflect Grammar Differently

alphabetic systems, like English, Spanish, and Russian, represent sounds and often require punctuation and spacing to reflect grammar

logographic systems (like Chinese) use characters to represent words or morphemes, and grammar is often inferred from word order and context

abjads (like Arabic or Hebrew) primarily write consonants, with vowels often implied or added with diacritics

syllabaries (like Japanese kana) represent syllables, and grammar is shown through particles and verb endings

3. Dialects and Variants make for unique grammatical norms

Even within a single language, different dialects or regional variants may have their own grammatical norms, which can be reflected in writing (especially in informal or literary contexts).

Two of the most interesting capabilities that digital technology has brought us are the ability to readily convert from one language to another and from spoken language to written language. We can now automatically convert from almost any language to another, back and forth, instantaneously, and from spoken to written forms. Originally, programmers used traditional deterministic methods, converting language with explicit grammar rules for both source and target languages. They also depended on bilingual dictionaries and on syntactic and morphological analysis. This approach worked quite well for structured, formal language and closely related language pairs (like Spanish ↔ Italian), but it struggled with idioms, ambiguity, contextual meaning, and informal or creative language. This is where the new stochastic computing approaches shine and, as we’ll see in the next post, generative AI uses a combination of deterministic and stochastic computing to do what it does and to address these challenges.
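Here is a deliberately tiny sketch of the dictionary-driven, deterministic approach described above. It translates English to Spanish one word at a time. The dictionary and the function name are hypothetical and written by hand for this illustration; real rule-based translators were far more sophisticated, with grammar rules layered on top of the dictionary.

```python
# Hypothetical, hand-written English -> Spanish dictionary (illustration only).
DICTIONARY = {
    "the": "el",
    "cat": "gato",
    "eats": "come",
    "fish": "pescado",
    "exam": "examen",
    "is": "es",
    "a": "un",
    "piece": "pedazo",
    "of": "de",
    "cake": "pastel",
}


def translate_word_by_word(sentence: str) -> str:
    """Look up each word in the dictionary, in order.
    Words that are not in the dictionary are kept in [brackets]."""
    words = sentence.lower().split()
    return " ".join(DICTIONARY.get(word, f"[{word}]") for word in words)


if __name__ == "__main__":
    # A plain, literal sentence translates well.
    print(translate_word_by_word("The cat eats fish"))
    # el gato come pescado

    # An idiom is translated literally and the meaning ("very easy") is lost.
    print(translate_word_by_word("The exam is a piece of cake"))
    # el examen es un pedazo de pastel
```

The second result is a grammatical Spanish sentence, but it describes an exam that is literally a slice of cake. A Spanish speaker would more naturally say the exam is "pan comido". No word-by-word rule can know that, which is exactly the kind of gap described above.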

Today’s critical learning…

In a nutshell, deterministic computing works really well for a wide variety of problems where we have models that are well defined. But for language translation, as an example problem, AI developers have been able to improve on the deterministic approaches by using stochastic systems and models (like statistical machine translation and neural machine translation). Stochastic systems (and models) use probabilities, statistics, prediction, and very large datasets to:

learn patterns from real-world usage

handle ambiguity and context more flexibly

adapt to idiomatic and informal expressions

These systems don’t rely solely on grammar rules—they learn from examples, which makes them more robust in practice. So, where might you get a lot of examples of written text to use as a training base for a stochastic system? Why, the Internet, of course.
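As a rough illustration of "learning from examples", the sketch below counts which word tends to follow which in a handful of sentences, then turns those counts into probabilities. The four example sentences and the function names are invented for this post. Real systems learn from vastly more text and use far richer models, so treat this only as a picture of the basic idea.

```python
from collections import Counter, defaultdict

# Hypothetical miniature "training set" (invented for illustration).
EXAMPLES = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
    "the cat sat on the sofa",
]


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn the raw counts for `word` into probabilities that sum to 1.
    Returns an empty dict if the word never appeared in the examples."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: n / total for w, n in followers.items()} if total else {}


if __name__ == "__main__":
    model = train_bigrams(EXAMPLES)

    probs = next_word_probabilities(model, "the")
    print(probs["cat"])               # 0.375 (3 of the 8 words seen after "the")
    print(max(probs, key=probs.get))  # cat

    after_cat = next_word_probabilities(model, "cat")
    print(max(after_cat, key=after_cat.get))  # sat
```

Nobody wrote a rule saying that "cat" often follows "the". The program picked that up from the examples, and giving it more examples would sharpen its estimates.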

In the next post we’ll look at the generative AI phenomenon and its commercial success through ChatGPT and other solutions, and, importantly, how these systems use models and stochastic approaches to transform the way we use digital technologies and live in our world.